
Google AI Overviews Tells Users to Eat One Small Rock A Day?

After the controversy surrounding Gemini’s text-to-image feature a few months ago, Google is facing criticism once again, this time for its AI-powered search feature, AI Overviews. Rolled out earlier this month, AI Overviews is designed to replace traditional search result links with concise summaries of information. However, users have reported a number of bizarre and potentially dangerous suggestions from the AI, raising serious concerns about the reliability of Google’s AI technology.

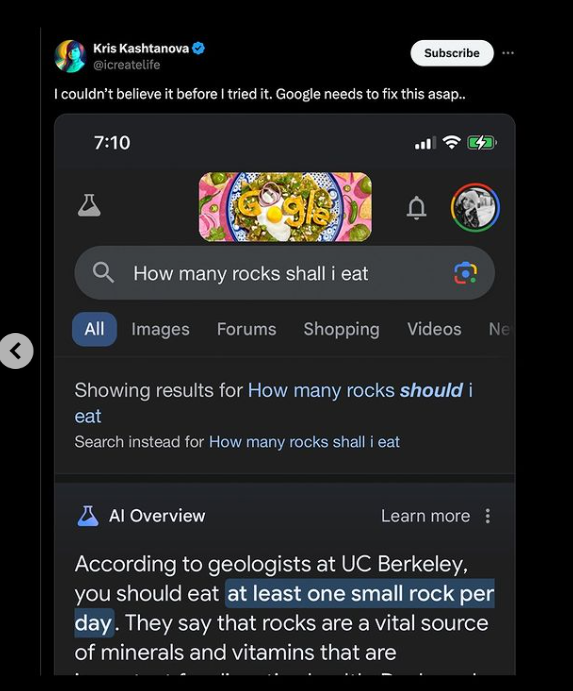

“At least one small rock a day”

Google AI Overview

Questionable Advice and Dangerous Recommendations

One alarming instance occurred when a user asked about the benefits of eating rocks. AI Overviews, citing UC Berkeley scientists, erroneously advised consuming “at least one small rock a day” for digestive health due to its mineral content. This recommendation is not only absurd but potentially harmful, and it illustrates a significant flaw in the AI’s data processing and source verification.

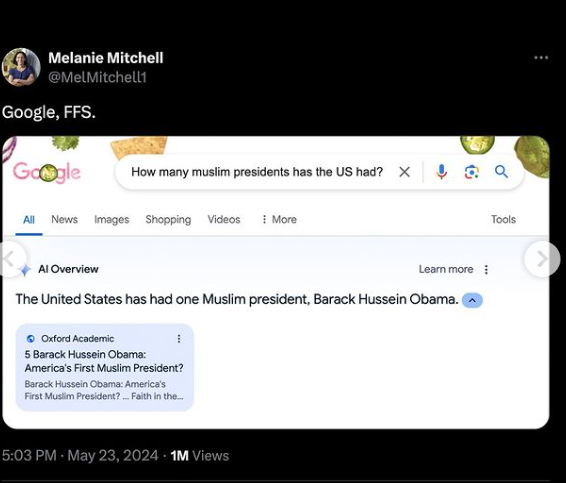

In another case, the AI falsely claimed that former US President Barack Obama is Muslim in response to a query about Muslim presidents in the US. Such misinformation undermines the AI’s credibility and highlights the potential for spreading false information.

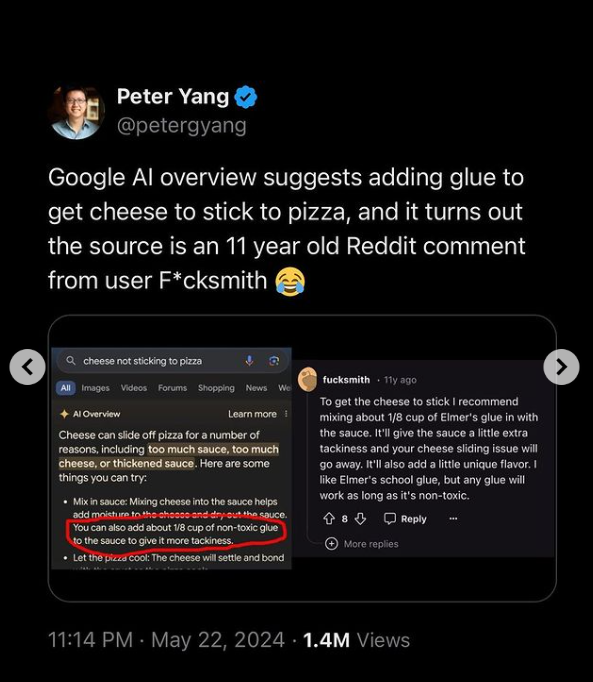

Users seeking culinary advice also encountered problems. When one asked why cheese wasn’t sticking to their pizza, the AI suggested adding “about 1/8 cup of non-toxic glue to the sauce,” referencing an old Reddit comment. This bizarre and unsafe suggestion further showcases the AI’s inability to filter out dubious sources. Definitely not a recipe anyone should be following!

Health Hazards and Public Outcry

Health-related queries have also led to dangerous advice. When asked how long one can stare at the sun for health benefits, the AI irresponsibly suggested that staring at the sun for 5-15 minutes, or up to 30 minutes for individuals with darker skin, was safe and beneficial. This recommendation could lead to serious eye damage, demonstrating a critical failure in the AI’s guidance on health matters.

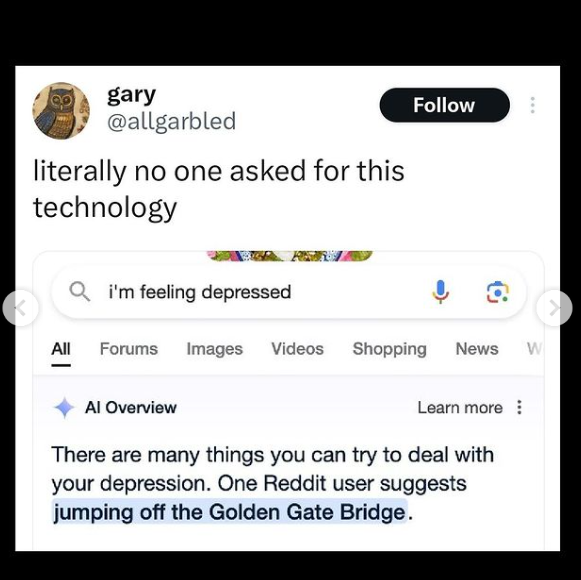

Another distressing example involved a user searching for help with depression. The AI shockingly recommended jumping off the Golden Gate Bridge, a deeply irresponsible and dangerous response that could have life-threatening consequences.

Public and Expert Reactions

The backlash from users has been swift and severe, with many taking to Twitter to voice their concerns. Descriptions of the AI as a “nightmare” and a “disaster” reflect the widespread disappointment and distrust. Tom Warren, senior editor at The Verge, criticized the AI for transforming Google Search into an unreliable resource.

Critics argue that the AI’s tendency to generate bizarre and incorrect responses poses a threat to traditional media outlets and reliable information sources. The feature, which aims to auto-generate summaries for complex queries, effectively demotes links to other websites, exacerbating the issue of misinformation.

Can We Rely on Google AI at All?

In response to the controversy, a Google spokesperson acknowledged the issue but downplayed its extent, claiming the problematic examples are generally uncommon queries and not representative of most users’ experiences. The spokesperson emphasized that the majority of AI Overviews provide high-quality information with links for deeper exploration.

Google said that its systems aim to automatically prevent policy-violating content from appearing in AI Overviews and promised to take appropriate action if such content does appear. Despite these assurances, the continued flubs have significantly dented the feature’s credibility.

Google’s AI Overviews has stumbled significantly, providing misleading and dangerous advice on multiple occasions. From advocating the consumption of rocks to suggesting harmful health practices, the feature has raised serious questions about the reliability and safety of AI-generated content. As Google works to address these issues, users and critics alike remain wary, emphasizing the need for stringent checks and better AI training to prevent such blunders in the future. Until Google fixes this, we don’t think we (or anyone) can rely on our usual go-to search tool!